Appius attended Big Data LDN 2025, and here are our key takeaways from the UK's biggest data community and data technology event.

Read on for the things you really need to know for your data strategy, plus some clues as to which brands are really 'walking the walk' with AI and Big Data strategies.

1. Agentic AI is the future

If we had to summarise Big Data LDN 2025 in two words, it would be ‘Agentic AI’. It was undeniably the hottest topic of the year, but what is it? Agentic AI can be described as autonomous systems designed to make decisions and act on them, communicating with many pieces of software. It goes beyond the LLMs we are all used to by now, such as ChatGPT and Gemini: agentic AI may use LLMs to perceive, reason and plan, but it also interacts with built-in tools to get tasks done.

In an example given by Yasmeen Ahmen of the Google Cloud team, the agent was prompted to review a file of potentially fraudulent transactions. The agent then loaded the file, queried the data, compared it against a historical table, flagged which transactions seemed fraudulent and paused the affected user's account. It then created a ticket in a separate platform containing all the transaction details for review, and sent an email to the user letting them know about potential fraudulent activity on their account, with next steps. It did all this in under a minute, while writing out and reporting each step it was taking to the person who gave the initial prompt.
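To make the idea concrete, here is a minimal Python sketch of the loop behind a demo like this, under heavy assumptions: the planner is a hard-coded stub standing in for the LLM, and the tools (`load_file`, `flag_fraud`, `pause_accounts`, `create_tickets`, `email_users`), the fraud rule and the data are all hypothetical. It is not Google's implementation; it only shows the pattern of an agent choosing tools, calling them in turn and narrating each step.

```python
from dataclasses import dataclass, field

# Hypothetical reference data: each user's historical average spend.
HISTORICAL_AVG_SPEND = {"u1": 40.0, "u2": 25.0}


@dataclass
class Workspace:
    """Shared state that the hypothetical tools read from and write to."""
    inbox: list
    transactions: list = field(default_factory=list)
    flagged: list = field(default_factory=list)
    paused: set = field(default_factory=set)
    tickets: list = field(default_factory=list)
    emails: list = field(default_factory=list)


def load_file(ws: Workspace) -> str:
    ws.transactions = list(ws.inbox)
    return f"loaded {len(ws.transactions)} transactions"


def flag_fraud(ws: Workspace) -> str:
    # Toy rule (an assumption): flag spend over 10x the user's historical average.
    ws.flagged = [t for t in ws.transactions
                  if t["amount"] > 10 * HISTORICAL_AVG_SPEND[t["user"]]]
    return f"flagged {len(ws.flagged)} transactions"


def pause_accounts(ws: Workspace) -> str:
    ws.paused = {t["user"] for t in ws.flagged}
    return f"paused accounts {sorted(ws.paused)}"


def create_tickets(ws: Workspace) -> str:
    # Stand-in for raising a ticket in a separate review platform.
    ws.tickets = [f"Review txn {t['id']} ({t['user']}, {t['amount']})" for t in ws.flagged]
    return f"created {len(ws.tickets)} tickets"


def email_users(ws: Workspace) -> str:
    # Stand-in for an email service: the messages are only recorded.
    ws.emails = [f"To {u}: unusual activity detected, account paused" for u in sorted(ws.paused)]
    return f"sent {len(ws.emails)} emails"


TOOLS = {f.__name__: f for f in (load_file, flag_fraud, pause_accounts, create_tickets, email_users)}


def plan(prompt: str) -> list:
    # Stub planner: a real agent would ask an LLM which tool to call next.
    if "fraud" in prompt.lower():
        return ["load_file", "flag_fraud", "pause_accounts", "create_tickets", "email_users"]
    return []


def run_agent(prompt: str, rows: list):
    ws = Workspace(inbox=rows)
    steps = []
    for name in plan(prompt):
        result = TOOLS[name](ws)
        steps.append(f"{name}: {result}")  # narrate each step back to the prompter
    return ws, steps


if __name__ == "__main__":
    sample = [
        {"id": 1, "user": "u1", "amount": 35.0},
        {"id": 2, "user": "u1", "amount": 900.0},
    ]
    _, log = run_agent("Review this file for possible fraud", sample)
    print("\n".join(log))
```

A production agent would normally let the LLM re-plan after every tool result rather than fixing the sequence up front; the fixed plan here just keeps the sketch deterministic.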

Well-designed agents will be able to automate tasks, saving a business large amounts of time and making full use of the tools they are integrated with.

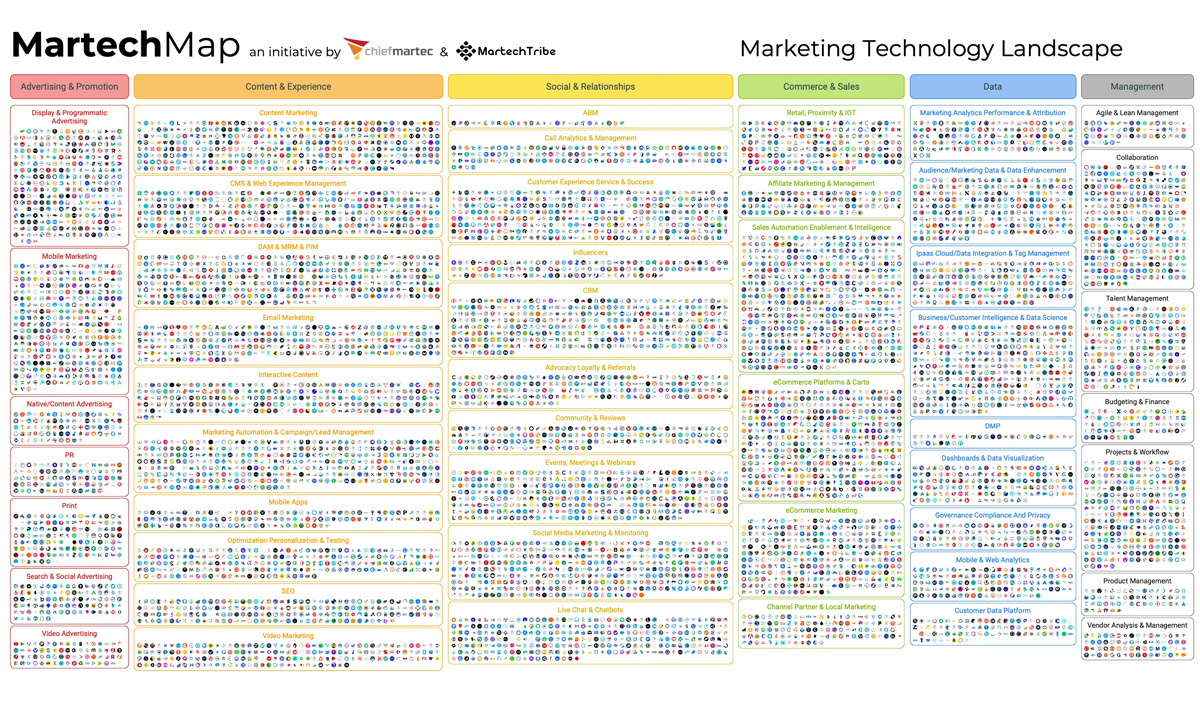

2. Use the right tool for the job: expand your data toolkit

You wouldn’t drill a hole with a saw, so why are we using LLMs for everything?

LLMs like ChatGPT and Gemini have become almost synonymous with people’s understanding of what AI is, but there is a whole field of machine learning models that can be more effective and efficient at specific tasks. If you ask an LLM to find hidden structures in a dataset, it is likely to be less effective than a clustering model such as K-means or DBSCAN.
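As a rough illustration of the 'right tool' point, here is a minimal pure-Python K-means (Lloyd's algorithm) on 2D points. Two simplifications are our own assumptions: initialisation takes the first point and then repeatedly the point farthest from the centroids chosen so far (a deterministic stand-in for k-means++), and there is no feature scaling or help choosing k. In practice you would reach for a library such as scikit-learn, which also provides DBSCAN for density-based clustering.

```python
import math


def _closest(p, centroids):
    # Index of the centroid nearest to point p (Euclidean distance).
    return min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))


def kmeans(points, k, max_iters=100):
    # Deterministic farthest-point initialisation (simplified stand-in for k-means++).
    centroids = [points[0]]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(far)

    labels = []
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [_closest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        new = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                new.append(tuple(sum(xs) / len(members) for xs in zip(*members)))
            else:
                new.append(centroids[i])  # leave an empty cluster's centroid in place
        if new == centroids:  # converged: assignments can no longer change
            break
        centroids = new
    return centroids, labels


pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centres, groups = kmeans(pts, k=2)
print(groups)  # [0, 0, 0, 1, 1, 1]
```

The model finds the two groups purely from the geometry of the data, cheaply and repeatably, which is the kind of task where an LLM is the wrong tool.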

This doesn’t just apply to ML models, however; it also applies to how you store the data. John Swain, from Google Cloud Data Analytics, gave a seminar on graphs, an underutilised way of storing data that represents entities (nodes) and the relationships (edges) between them far more naturally than a typical relational database.
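To show what 'nodes and edges' means in practice, here is a small in-memory Python sketch. It is not a real graph database, and the customers, products and `BOUGHT` relationship are made-up examples. A 'customers who bought this also bought' question becomes a two-hop traversal, where a relational schema would typically need a self-join on a purchases table.

```python
from collections import defaultdict


class Graph:
    """Tiny in-memory graph: nodes are entities, edges are labelled relationships."""

    def __init__(self):
        self.edges = defaultdict(set)  # node -> {(relationship, neighbour)}

    def add_edge(self, src, rel, dst):
        # Store both directions so relationships can be traversed either way;
        # "~REL" marks the reverse direction of REL.
        self.edges[src].add((rel, dst))
        self.edges[dst].add((f"~{rel}", src))

    def neighbours(self, node, rel):
        return {dst for r, dst in self.edges[node] if r == rel}


def also_bought(g, customer):
    # Hop 1: customer -> products; hop 2: products -> other buyers -> their products.
    owned = g.neighbours(customer, "BOUGHT")
    result = set()
    for product in owned:
        for other in g.neighbours(product, "~BOUGHT"):
            if other != customer:
                result |= g.neighbours(other, "BOUGHT")
    return result - owned


g = Graph()
g.add_edge("alice", "BOUGHT", "tent")
g.add_edge("bob", "BOUGHT", "tent")
g.add_edge("bob", "BOUGHT", "stove")
g.add_edge("carol", "BOUGHT", "kayak")
print(also_bought(g, "alice"))  # {'stove'}
```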

Having the right tool for the job can improve the accuracy, speed, cost and usage of any data project. Understanding the problem at hand and choosing the right tool for it is difficult, which is why the next key takeaway is so important.

3. Plan data projects early to get the most out of them: data is a product, not a feature

The tech industry has been very guilty of putting a product live and then, at the last minute or after go-live, flagging that we need tracking and statistics on its usage. This leads to suboptimal tracking (pulling data from the front end rather than having it pushed to a data layer), delays in identifying major failure points, some data points becoming impossible to capture, and a very upset data team.

The earlier you can get data experts into the planning process, the greater the chance of successful tracking. As early as possible is key: data teams should be part of the design process so they can design the data capture to fit the solution.

In a seminar titled 'How to turn around a failing data team – Tales from consulting', Ben Rogojan explained that common failures seen in data teams include lack of ownership, over-engineering or wrong sizing, outputs without outcomes, and decision avoidance. A data owner is key to making those decisions and ensuring the data is actionable in the end solution, while early planning helps eliminate incorrect sizing.

4. All your data in a single place

Data needs to be in a place where it is actionable. When data from multiple sources is stored and kept in different services, working with it can be challenging and manual. Databricks, Snowflake, Fabric and BigQuery are all platforms that revolve around bringing data together so it is actionable for data analysts and data scientists.

The first step is putting all your data somewhere, no matter the format. This is why the data lake architecture has become so popular: it is a large pool of data from many sources that does not need to be in a specific format. You then carry out any transformations required within the lake to get the data into the format needed for analysis. A Data Vault, a Medallion Architecture or another framework can help keep the lake organised.
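A Medallion Architecture can be sketched in a few lines of Python. This is a simplified illustration with hypothetical order records, not any platform's implementation: bronze holds data exactly as it landed, silver is cleaned, typed and de-duplicated, and gold is a business-ready aggregate (here, revenue by country).

```python
from collections import defaultdict

# Bronze: records landed as-is from two hypothetical sources, formats not yet aligned.
bronze = [
    {"source": "web", "order_id": "A1", "amount": "19.99", "country": "uk"},
    {"source": "app", "order_id": "A2", "amount": "5.00", "country": "UK "},
    {"source": "app", "order_id": "A2", "amount": "5.00", "country": "UK "},  # duplicate
    {"source": "web", "order_id": "A3", "amount": "n/a", "country": "FR"},   # bad amount
    {"source": "web", "order_id": "A4", "amount": "30", "country": "fr"},
]


def to_silver(rows):
    """Clean, type and de-duplicate bronze records."""
    seen, clean = set(), []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # a real pipeline would quarantine this row rather than drop it
        if r["order_id"] in seen:
            continue
        seen.add(r["order_id"])
        clean.append({"order_id": r["order_id"], "amount": amount,
                      "country": r["country"].strip().upper()})
    return clean


def to_gold(rows):
    """Aggregate silver records into a business-ready view: revenue by country."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["amount"]
    return {country: round(total, 2) for country, total in totals.items()}


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'UK': 24.99, 'FR': 30.0}
```

Keeping bronze untouched means silver and gold can always be rebuilt if a cleaning rule changes.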

Putting all your data in one place, such as a data lake, runs the risk of it becoming a data swamp if there is no proper structure and there are no rules. A data swamp is a data lake that has lost its structure, governance and trust, making it difficult to search and leaving it full of inconsistent, low-quality data. It ends up being a money pit: you pay for its upkeep but extract no value from it. To avoid this, you can adopt a layered architecture, implement a data catalogue, enforce data quality rules, implement governance and access control, track data lineage, automate and version-control the inflow of data, and implement data lifecycle management.
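Enforcing data quality rules can start as simply as a set of named checks run before records move to the next layer. In this Python sketch the rules and field names are hypothetical examples. Failing records are quarantined along with the reasons they failed, rather than silently dropped, which keeps the lake trustworthy and the failures traceable.

```python
from datetime import date

# Hypothetical rule set: each rule is a readable name plus a predicate over one record.
RULES = {
    "customer_id present": lambda r: bool(r.get("customer_id")),
    "email contains @": lambda r: "@" in r.get("email", ""),
    "signup_date not in future": lambda r: (
        r.get("signup_date") is not None and r["signup_date"] <= date.today()
    ),
}


def apply_rules(records, rules=RULES):
    """Split records into those that pass every rule and those to quarantine."""
    passed, quarantined = [], []
    for rec in records:
        failures = [name for name, check in rules.items() if not check(rec)]
        if failures:
            quarantined.append((rec, failures))  # keep the reasons for later review
        else:
            passed.append(rec)
    return passed, quarantined


batch = [
    {"customer_id": "c1", "email": "a@example.com", "signup_date": date(2024, 1, 1)},
    {"customer_id": "", "email": "b@example.com", "signup_date": date(2024, 2, 1)},
    {"customer_id": "c3", "email": "not-an-email", "signup_date": date(2999, 1, 1)},
]
ok, bad = apply_rules(batch)
for rec, reasons in bad:
    print(rec.get("customer_id") or "<missing>", reasons)
```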

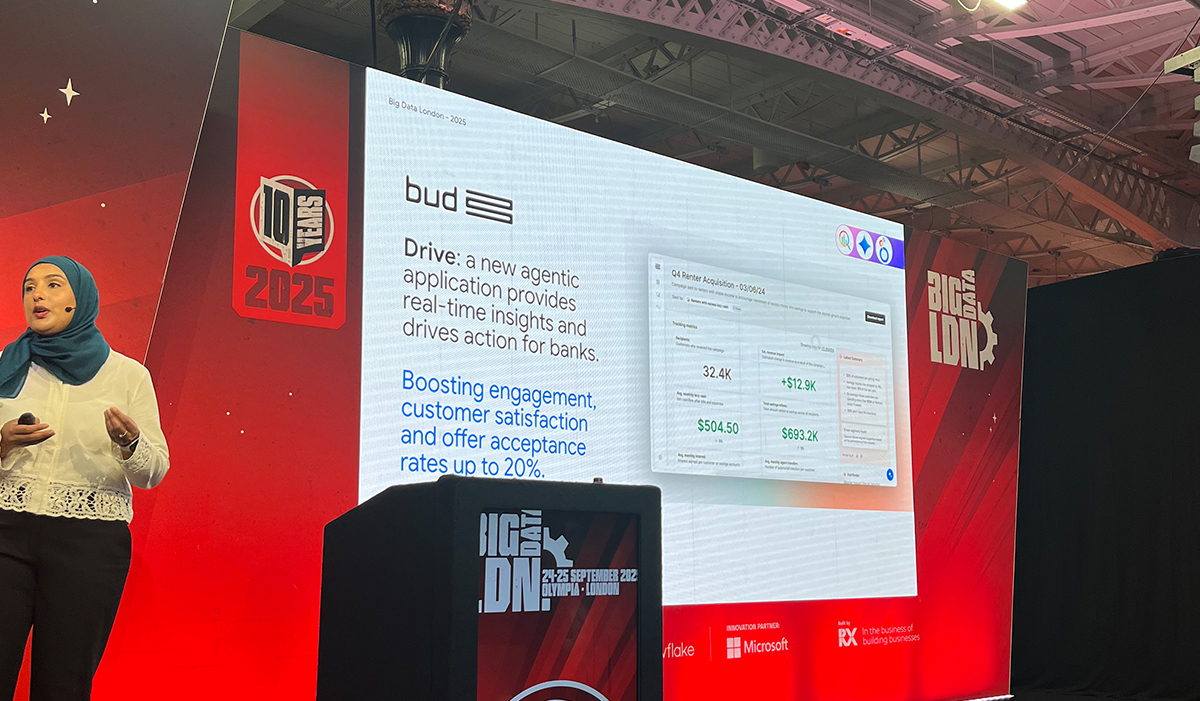

5. Consumer-facing AI use cases have increased significantly

We at Appius always keep a close eye on who is delivering AI to real people outside their organisation, and there was a noticeable increase in real case studies this year. Here's a flavour:

- Morrisons using agentic AI from Google for an in-store product finder, tackling the biggest drain on staff time

- Google featuring again, cutting average family burger order times from 89 seconds with a conventional app interface to 33 seconds with conversational AI

- Gousto deploying a 'medallion' data model of bronze, silver and gold dbt mesh data layers, with the gold layer making recommendations to customers from its 200+ recipes

- Keyloop delivering a much-improved experience to car dealership customers: sending automated comms to customers about services, and using AI to anticipate service needs for dealer service reps on the phone instead of asking the customer 'what service does it need?'

6. Professor Brian Cox compared the universe to a supercomputer

We were treated to this comparison and much more from our closing keynote speaker:

- it's taken 13 billion years for some light to reach us from the birth of the universe as we know it

- galaxies were formed earlier than we thought

- the James Webb telescope has picked up the baton from Hubble to reveal more secrets

- it is believed that the universe was smaller than an atom before the Big Bang, with quantum mechanical fluctuations in that object

- black holes distort spacetime

- the huge black hole at the centre of the galaxy M87 shows how black holes 'destroy information' or re-imprint it as data in the universe via thermal release

- AI has been used in astrophysics for years, even writing programs for quantum computers to process data

7. 60% of AI projects are failing to deliver on business objectives...

...according to a recent Gartner report, and 55% are being delayed due to data concerns. It was clear to us that internal AI projects are currently far more successful than those facing outwards from enterprise organisations. Alteryx gave a great presentation of 15 real AI use cases in 15 minutes, repeated across a number of sectors and use case areas such as 'Marketing'. Almost all were internal business processes being improved or made more efficient. It's time to look inside your organisation for AI 'wins'. Do you have the data scientists and 'agentic' toolkit to get these gains? If you don't, Appius can advise.

8. The Future of BI

In the world of data analytics, reporting is more accessible than ever. Over the past few decades, we’ve seen BI and reporting democratised, moving from SQL specialists to dashboards to self-service tools. A current hot topic is Conversational BI, which enables non-technical users to interact with data using natural language. This reduces reliance on data analysts and speeds up decision-making. Several new technologies are emerging in this space, including Databricks Genie, which goes beyond simple Q&A towards Compound AI that understands data structures and business logic at scale.

9. The Rise of Agentic Analytics

As with other disciplines showcased at Big Data LDN, analytics hasn’t escaped the wave of agentic hype. In this space, it’s appearing under the creative name "Agentic Analytics". Agentic analytics promises a tireless analyst that works alongside human analysts to handle routine tasks and generate insights. Tableau showcased its approach, which combines features such as data preparation, Q&A, monitoring and insights through integration with Salesforce’s platform. Together, these capabilities enable an intelligent agent that can be proactive, not just reactive.

10. Data Middlemen

Lastly, there’s the continuing growth of analytics engineering as a field distinct from data analytics and data engineering. Analytics engineers focus on modelling and transforming data for broader use, applying software engineering practices such as version control and documentation to deliver reliable, reusable outputs.

Three Key Takeaways:

- Make your data clean and ready for use by agents and BI users

- Get business users talking to data

- Start collaborating with AI agents to decrease time to action

At Appius we have much more information on these subjects, plus case studies available on request, and we can put you in touch with the sources of these insights and help you get access to videos of the presentations. Just contact us for a no-obligation chat or information exchange on any or all of the key takeaways. Our expert strategists and data scientists in our Experience Team would love to share thoughts in this progressive and exciting arena, and help you find the solutions and opportunities that fit your current status and organisational needs.

About the Author

Since joining Appius in 2022, Harry has led all of our work with GA4, Google Tag Manager, Google BigQuery and Looker Studio. This involves carefully constructed frameworks of tracking and insight across digital marketing campaigns, website and app usage and across multiple client systems and interfaces. Without the skills of a data scientist like Harry and the others in the Appius team, you are unlikely to be getting the best from your paid campaigns or digital solutions, in terms of measuring success and focusing improvements in the right places.

Harry also leads the implementation of cookie consent and regulatory requirements, using solutions such as our partner OneTrust as well as client-selected platforms. Similarly, he configures call tracking solutions such as our partner Infinity to make sure every ounce of value from the digital channel is measured.

Harry's most progressive contribution to Appius R&D and client work is in AI and Big Data. He is actively delivering data lake, machine learning and AI implementations for our enterprise clients, with our main focus being on how companies can create their own AI with their own data, adding value for their users by surfacing the essence of their unique organisational value-add.